Role of Tensors and Linear Algebra in GPU Computing

11 October, 2023

Linear algebra, deep learning, and GPU architectures are fundamentally interlinked.

Linear algebra, the math of matrices and tensors, forms the foundation of deep learning. GPUs, initially tailored for graphics, have evolved into stellar tools for executing linear algebra operations.

Mathematics of Tensors

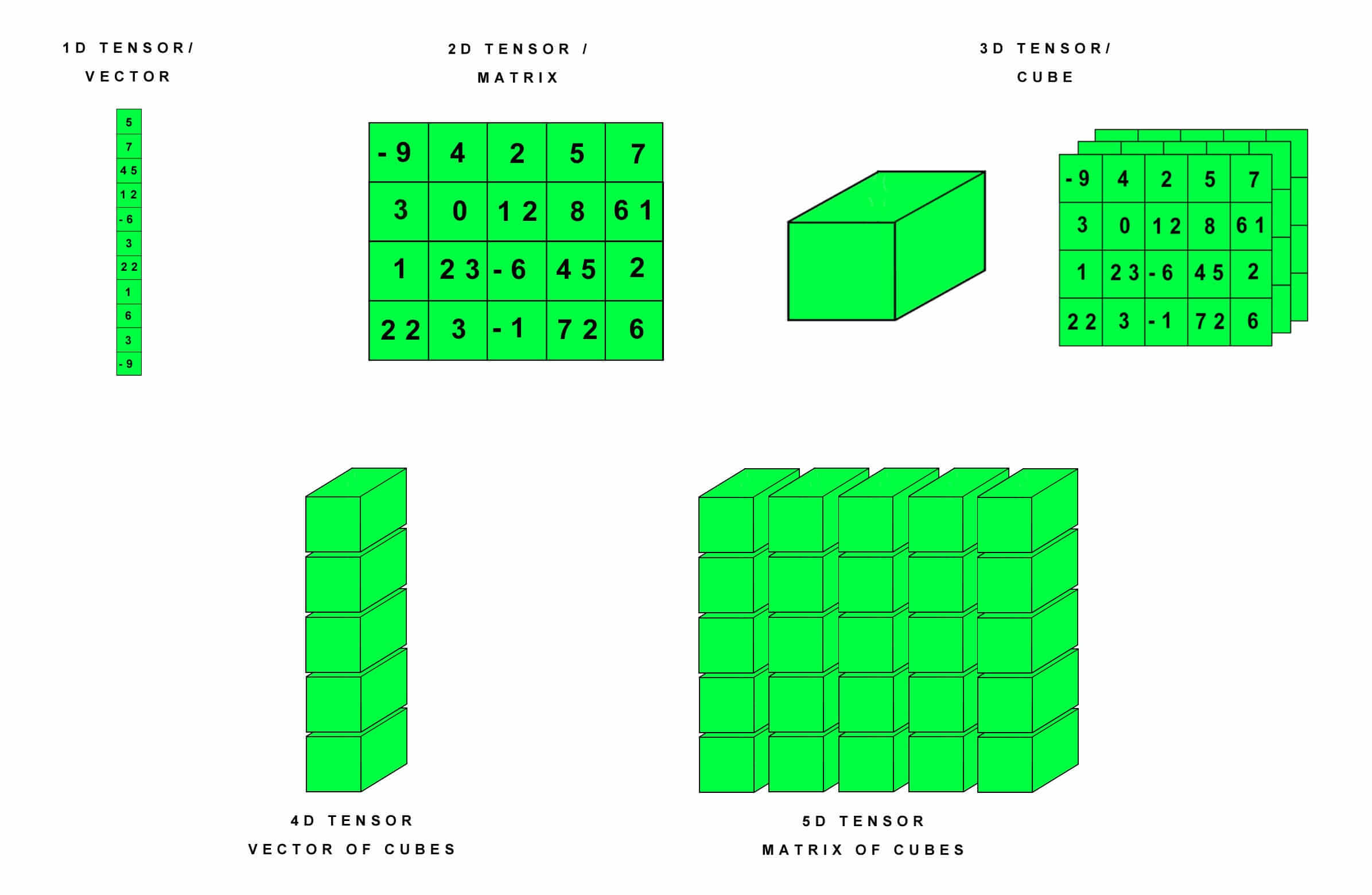

Tensors, the stars of the show, are multidimensional arrays that generalize single numbers, vectors, and matrices. In a neural network, the input data, the weights, and the biases are all stored as tensors.

0th-Order Tensor: A solo player, like a single number.

1st-Order Tensor: A team of numbers, marching in order, a.k.a. a vector.

2nd-Order Tensor: A grid of numbers, a matrix with rows and columns.

Higher-Order Tensor: An array with three or more dimensions, generalizing vectors and matrices.
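To make the orders above concrete, here is a minimal sketch in plain Python that stores tensors as nested lists. The `shape` helper is a hypothetical name introduced only for this illustration, and it assumes non-empty, rectangular nesting; real frameworks expose the same idea through a built-in shape attribute.

```python
def shape(t):
    """Return the shape of a nested-list tensor as a tuple.

    Assumes every level is a non-empty list and the nesting is rectangular.
    """
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0]
    return tuple(dims)


scalar = 7.0                                              # 0th order: one number
vector = [1.0, 2.0, 3.0]                                  # 1st order: 3 numbers in a row
matrix = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]             # 2nd order: 3 rows, 2 columns
cube = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]  # 3rd order: 2 x 3 x 4

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("cube", cube)]:
    # The order of a tensor is simply the length of its shape.
    print(name, "order:", len(shape(t)), "shape:", shape(t))
```

The order of each tensor is the number of indices needed to pick out a single element.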

The Tensor Versatility

Tensors can represent text, images, and videos, and they do the heavy lifting when training neural networks. Their arsenal of operations includes addition, matrix multiplication, transposition, contraction, reshaping, and slicing.
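A few of these operations can be sketched on small 2nd-order tensors using nothing but nested lists. The helper names (`add`, `transpose`, `matmul`, `reshape`) are illustrative, not any library's API; in practice a library such as NumPy or PyTorch would provide optimized versions.

```python
def add(a, b):
    """Element-wise addition of two matrices with the same shape."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def transpose(a):
    """Swap rows and columns."""
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    """Matrix multiplication: a contraction over the index a and b share."""
    b_cols = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def reshape(flat, rows, cols):
    """Rearrange a flat list into a rows x cols matrix."""
    if len(flat) != rows * cols:
        raise ValueError("size mismatch")
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

total = add(A, B)
A_t = transpose(A)
product = matmul(A, B)
grid = reshape([1, 2, 3, 4, 5, 6], 2, 3)
first_column = [row[0] for row in A]   # slicing: take column 0 of A
```

Matrix multiplication is the simplest case of contraction: the column index of `A` and the row index of `B` are summed away.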

Tensors in Neural Networks

Every layer in a neural network dances to the tune of tensor operations. Key algorithms like backpropagation and gradient descent rely on these tensor moves.
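As a rough illustration of how a layer and gradient descent fit together, the sketch below fits a single linear layer `w * x + b` to a toy dataset. The data, learning rate, and step count are assumptions chosen for this example, and the gradients are written out by hand; backpropagation is what computes such gradients layer by layer in a deeper network.

```python
# Toy data generated from y = 2x + 1 (an assumption for this example).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]


def forward(xs, w, b):
    """Forward pass of one linear layer applied to every input."""
    return [w * x + b for x in xs]


def mse(preds, ys):
    """Mean squared error between predictions and targets."""
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


w, b = 0.0, 0.0   # initial parameters
lr = 0.05         # learning rate

for step in range(2000):
    preds = forward(xs, w, b)
    errors = [p - y for p, y in zip(preds, ys)]
    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = 2 * sum(errors) / len(xs)
    # Gradient descent: step against the gradient.
    w -= lr * grad_w
    b -= lr * grad_b

print("w =", round(w, 3), "b =", round(b, 3), "loss =", mse(forward(xs, w, b), ys))
```

After training, `w` and `b` should sit very close to 2 and 1. Every line inside the loop is an operation on whole lists of numbers, which is exactly the kind of work that gets expressed as tensor operations.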

In a nutshell, it's the numbers that fuel the deep learning journey, with linear algebra as the navigator, GPUs as the engine, and tensors as the versatile workhorses.

Tensors for GPU Parallel Power

Tensors are your key to parallel computation, and here's the magic: GPUs excel at parallelism like no other.

CPUs boast a few powerful cores tuned for fast sequential execution. On the flip side, GPUs flaunt thousands of smaller, simpler cores ready to tackle many operations simultaneously.

In the world of computing, parallelism is where the party's at, and GPUs are the life of that party!

A Perfect Duo of Tensors and GPUs

Leveraging Parallel Power

Tensors and GPUs are a match made in computational heaven. Why? Because operations on tensors, especially the more complex ones, can be split into many small, independent tasks. In a matrix multiplication, for example, each output element can be computed without waiting on any other. This maps directly onto the GPU's architecture, which can run thousands of these tasks side by side.
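The decomposition can be sketched on a CPU with Python's standard thread pool. This is only an analogy: Python threads will not give a GPU-style speedup, and `parallel_matmul` is a hypothetical name for this illustration. The point is that each output element is its own task with no dependency on the others.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """One independent task: a single output element of the product."""
    return sum(x * y for x, y in zip(row, col))


def parallel_matmul(a, b, workers=4):
    """Multiply a and b by handing each output element to a worker."""
    cols = list(zip(*b))
    n_cols = len(cols)
    # One task per (row, column) pair; no task reads another task's result.
    tasks = [(i, j) for i in range(len(a)) for j in range(n_cols)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the tasks.
        values = list(pool.map(lambda ij: dot(a[ij[0]], cols[ij[1]]), tasks))
    # Regroup the flat list of results into rows.
    return [values[i * n_cols:(i + 1) * n_cols] for i in range(len(a))]


result = parallel_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result)
```

On a GPU the same idea applies at a much larger scale, with each task mapped to a hardware thread rather than an operating system thread.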

Memory Magic

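GPUs bring their memory A-game, sporting a hierarchy of global, shared, and local memory. Global memory is large but comparatively slow, while shared memory is small and fast. Keeping frequently reused tensor data in the faster levels, rather than fetching it from global memory again and again, can lead to impressive efficiency gains.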
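One common way to exploit this hierarchy is tiling: a small block of each matrix is loaded once and reused for many multiply-adds. The sketch below mimics that access pattern in plain Python. It does not touch real GPU memory; `tiled_matmul` and the `tile` parameter are names assumed for this illustration.

```python
def tiled_matmul(a, b, tile=2):
    """Multiply a (n x k) by b (k x m) one tile at a time."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for k0 in range(0, k, tile):
                # On a GPU, these two tiles would be copied from global
                # memory into shared memory once, then reused by every
                # thread working on this block of the output.
                a_tile = [row[k0:k0 + tile] for row in a[i0:i0 + tile]]
                b_tile = [row[j0:j0 + tile] for row in b[k0:k0 + tile]]
                for i, a_row in enumerate(a_tile):
                    for j in range(len(b_tile[0])):
                        # Accumulate this tile's partial sum into the output.
                        c[i0 + i][j0 + j] += sum(
                            a_row[p] * b_tile[p][j] for p in range(len(b_tile))
                        )
    return c


A = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
B = [[9, 8, 7], [6, 5, 4], [3, 2, 1]]
C = tiled_matmul(A, B, tile=2)
print(C)
```

The result is the same as an ordinary matrix product; only the order in which data is touched changes, and that order is what determines how often slow memory has to be read.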

Optimization Frameworks and Libraries

Libraries like cuBLAS and cuDNN are the secret sauce. cuBLAS provides GPU-accelerated dense linear algebra routines such as matrix multiplication, while cuDNN provides tuned deep learning primitives such as convolutions. Top-tier frameworks like TensorFlow and PyTorch build on these libraries, providing a user-friendly abstraction layer.

Deep Learning Batching

Deep learning loves batches. Think of it as serving up a platter of data points and applying the same recipe to all of them at once. GPUs are in their element here, handling this parallelism like pros.
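Batching can be sketched as applying one dense layer, with the same weights and bias, to every sample in a batch. The layer sizes and values below are assumptions for this illustration. Because no sample depends on another, a GPU can process them simultaneously, and frameworks typically express the whole batch as a single matrix multiplication.

```python
def dense(x, weights, bias):
    """One dense layer applied to a single input vector."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, bias)]


def dense_batch(batch, weights, bias):
    """Apply the same layer (the same recipe) to every sample in the batch."""
    return [dense(x, weights, bias) for x in batch]


# A layer with 3 inputs and 2 outputs (illustrative values).
weights = [[1.0, 0.0, -1.0],
           [0.5, 0.5, 0.5]]
bias = [0.0, 1.0]

# A batch of 3 samples, each with 3 features.
batch = [[1.0, 2.0, 3.0],
         [4.0, 5.0, 6.0],
         [0.0, 0.0, 0.0]]

outputs = dense_batch(batch, weights, bias)
print(outputs)
```

The batch goes in as a 3 x 3 tensor and comes out as a 3 x 2 tensor: one output row per sample.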

Combined Magic

Thanks to software libraries and frameworks, building AI is easier than ever. With just a few lines of code, you can tango with your favorite neural network. In the background, GPUs work their magic, crunching tensor operations and conjuring patterns from your data.

In the world of AI, it's the dynamic dance between tensors and GPUs that makes the magic happen.